Google’s multi-million dollar, roughly decade-long chip effort represents one of the few viable alternatives to Nvidia’s powerful AI processors. Alphabet has unveiled its seventh-generation AI chip, named Ironwood, which the company said is designed to speed up the performance of AI applications. The Ironwood chip is designed for running AI applications, a workload known as inference, and to work in groups of as many as 9216 chips, said Amin Vahdat, Google Vice President, Machine Learning, Systems, and Cloud AI.

Ironwood is Google’s answer to the GPU crunch. The latest Tensor Processing Units (TPUs) will be available to Google Cloud customers later this year, according to Google. Let us dive straight into this AI chip, which promises more than first appears on the surface.

Google’s Latest Feat

Google has released Ironwood, the latest version of its own AI processor, the TPU. It is the seventh-generation TPU and has been designed specifically for inference, with the aim of running ‘thinking’, inferential AI models at scale. For more than a decade, TPUs have powered Google’s most demanding AI training and serving workloads, and they have enabled Google Cloud customers to do the same. Google describes Ironwood as its most powerful and energy-efficient TPU yet. It is worth understanding how Google reached this level in AI chip technology.

Current Capabilities

‘Thinking models’ have become markedly smarter and commonplace at Google. Ironwood is designed to gracefully manage the complex computation and communication demands of these models, which encompass large language models (LLMs), Mixture of Experts (MoE) models, and advanced reasoning tasks. These models require massive parallel processing and efficient memory access, so Ironwood is designed to minimize data movement and on-chip latency while carrying out massive tensor manipulations. At the frontier, the computational demands of thinking models extend beyond the capacity of a single chip. Ironwood therefore pairs its chips with a low-latency, high-bandwidth Inter-Chip Interconnect (ICI) network to support coordinated, well-synchronized communication at full TPU pod scale. Ironwood is available in two sizes based on AI workload demands: a 256-chip configuration and a 9216-chip configuration.

Decade Old TPU Extravaganza

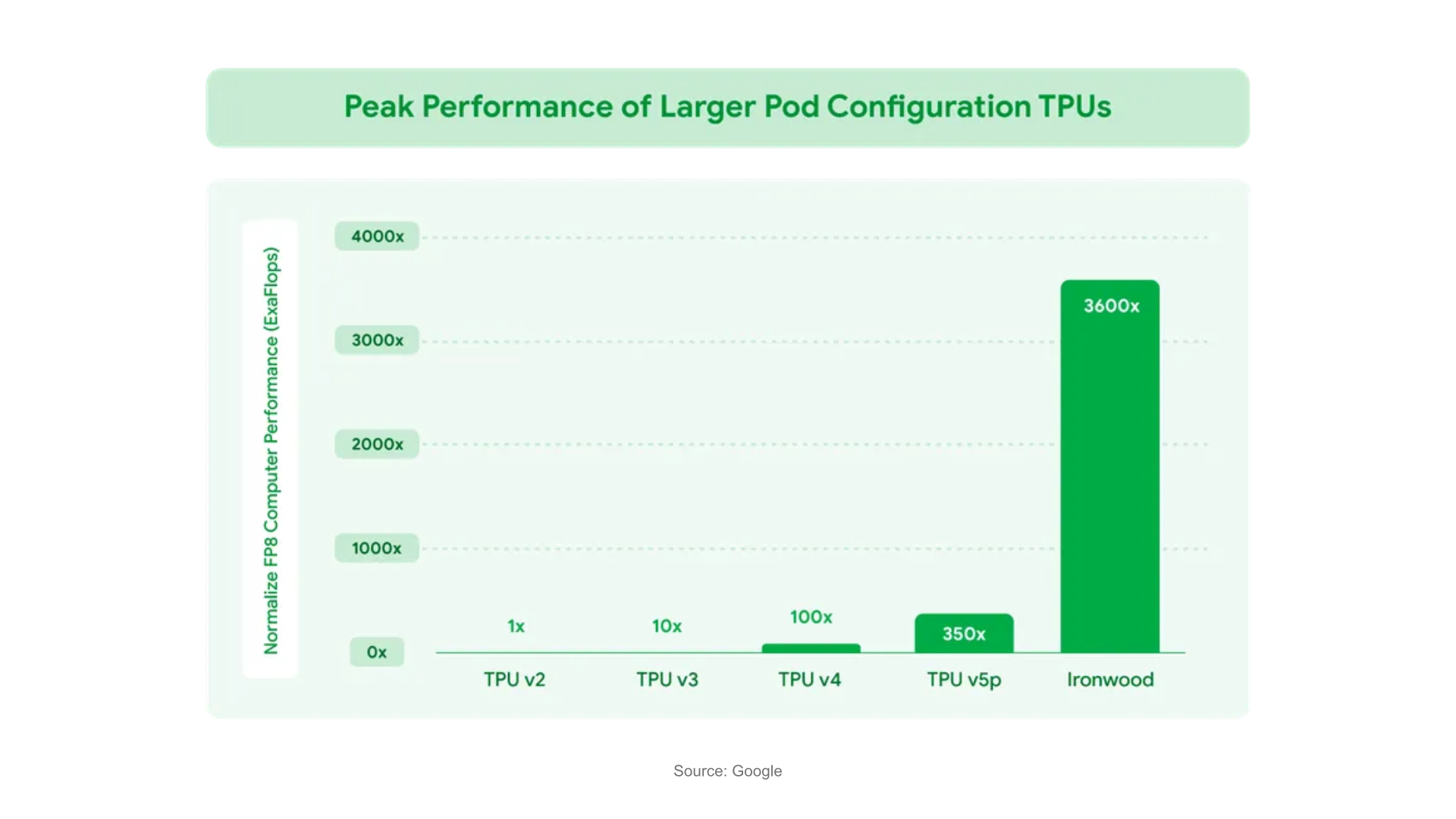

This representation by Google highlights the improvement in total peak FP8 FLOPS relative to TPU v2, Google’s first external Cloud TPU.

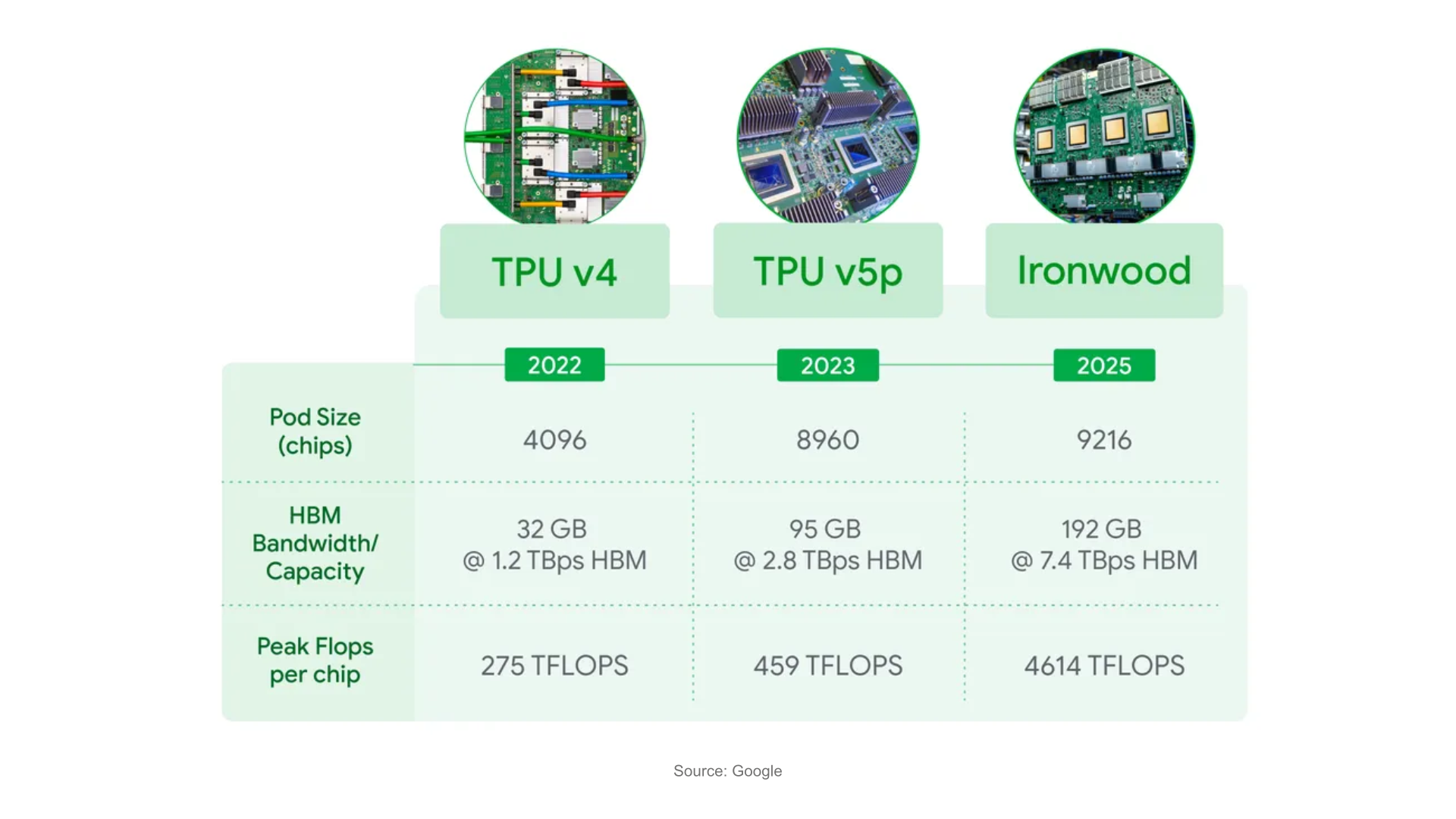

The side-by-side comparison above shows how far Ironwood’s capabilities extend beyond those of its predecessors.

Core Features:

1. When scaled to 9216 chips per pod, Ironwood delivers a total of 42.5 exaflops, more than 24 times the compute power of the world’s largest supercomputer, El Capitan.

2. Ironwood delivers the massive parallel processing power necessary for the most demanding AI workloads.

3. Each individual chip boasts peak compute of 4614 TFLOPs.

4. It features an enhanced SparseCore, a specialized accelerator for processing the ultra-large embeddings common in advanced ranking and recommendation workloads.

5. Pathways, Google’s own ML runtime developed by Google DeepMind, enables efficient distributed computing across multiple TPU chips.

6. Pathways enables hundreds of thousands of Ironwood chips to be composed together to rapidly advance the frontiers of generative AI computation.
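The pod-level figure in the list follows from the per-chip figure, and the arithmetic is easy to check. The short Python sketch below multiplies the quoted chip count by the quoted per-chip peak and compares the result with El Capitan. The El Capitan value of about 1.742 exaflops is an assumption brought in for illustration (it does not appear in this article, and it is a measured benchmark result rather than a peak FP8 number), so the ratio should be read as a rough indication only.

```python
# Figures quoted in the article.
CHIPS_PER_POD = 9216
PEAK_TFLOPS_PER_CHIP = 4614  # peak per-chip compute, in TFLOPs

# Assumption (not from the article): El Capitan's benchmark result,
# taken here as roughly 1.742 exaflops.
EL_CAPITAN_EXAFLOPS = 1.742

TFLOPS_PER_EXAFLOP = 1_000_000  # 1 exaflop = 10**6 teraflops


def pod_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate peak compute of a pod, in exaflops."""
    return chips * tflops_per_chip / TFLOPS_PER_EXAFLOP


pod_peak = pod_exaflops(CHIPS_PER_POD, PEAK_TFLOPS_PER_CHIP)
ratio = pod_peak / EL_CAPITAN_EXAFLOPS

print(f"Pod peak: {pod_peak:.1f} exaflops")
print(f"Ratio to El Capitan (assumed figure): {ratio:.1f}x")
```

Under these assumptions the pod total comes to about 42.5 exaflops and the ratio lands a little above 24, which is consistent with the figures quoted in the list.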

Former TPUs vs Trillium vs Ironwood

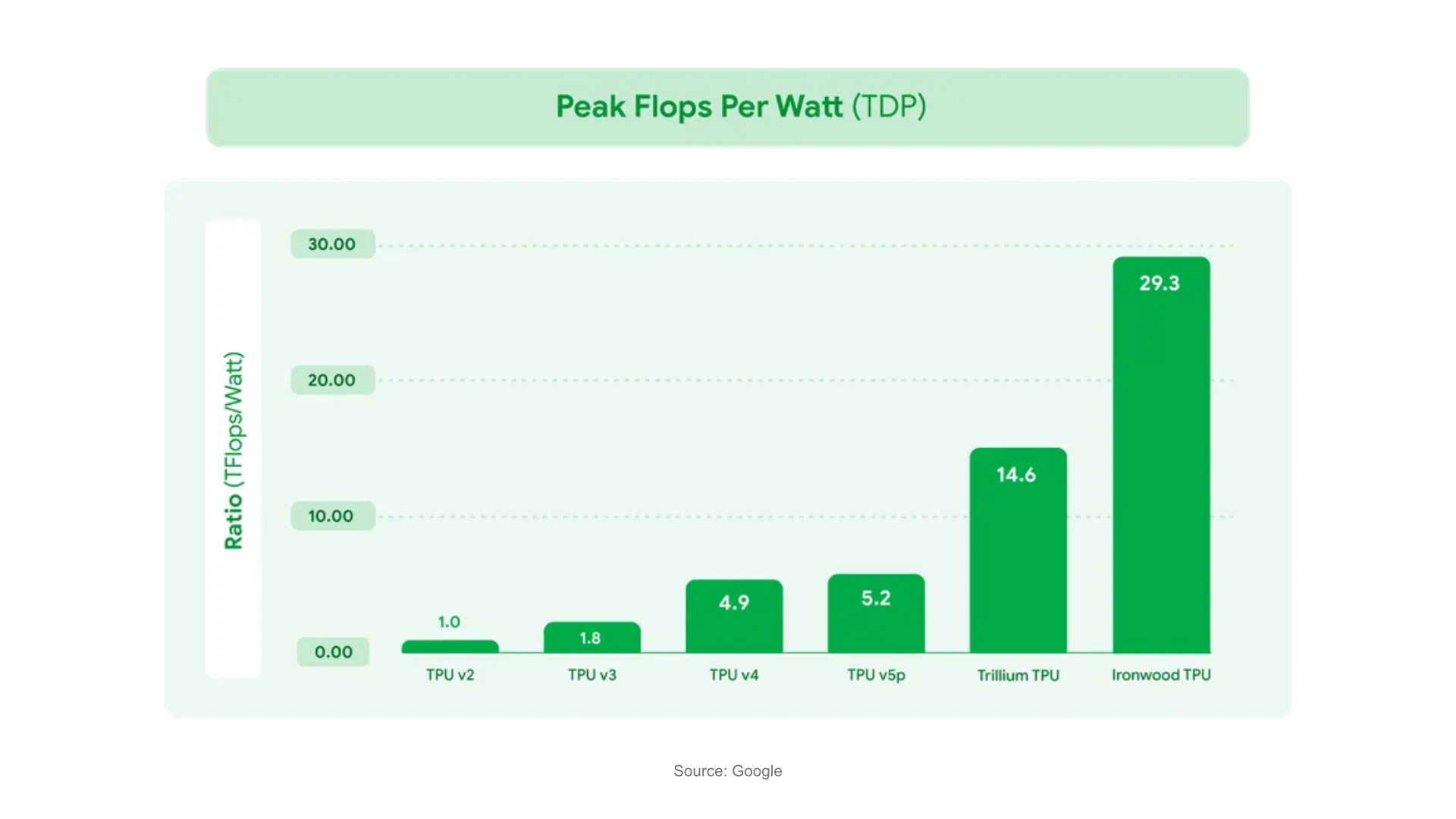

This chart reflects the enhanced capabilities that Ironwood brings to the table compared with its predecessors. It shows the power efficiency of Google’s latest TPU relative to the earliest-generation Cloud TPU v2, measured as peak FP8 FLOPS delivered per watt of thermal design power per chip package.
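The efficiency metric described here is a simple ratio, and a small Python sketch can make the definition concrete. The function names below are hypothetical, and the input numbers are placeholders chosen only to keep the arithmetic easy to follow. They are not real TPU specifications, which this article does not provide.

```python
def flops_per_watt(peak_tflops: float, tdp_watts: float) -> float:
    """Peak TFLOPs delivered per watt of thermal design power (TDP)."""
    if tdp_watts <= 0:
        raise ValueError("tdp_watts must be positive")
    return peak_tflops / tdp_watts


def relative_efficiency(
    chip_tflops: float,
    chip_tdp_watts: float,
    baseline_tflops: float,
    baseline_tdp_watts: float,
) -> float:
    """Efficiency of a chip package relative to a baseline chip package."""
    chip = flops_per_watt(chip_tflops, chip_tdp_watts)
    baseline = flops_per_watt(baseline_tflops, baseline_tdp_watts)
    return chip / baseline


# Placeholder values only (NOT real TPU figures): a newer chip with
# 100 TFLOPs at 200 W versus a baseline chip with 25 TFLOPs at 100 W.
example = relative_efficiency(100.0, 200.0, 25.0, 100.0)
print(f"Relative performance per watt: {example:.1f}x")
```

A chart like the one above would plot this relative value for each TPU generation, with Cloud TPU v2 as the baseline at 1x.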

The Future Turn

Google’s decade-long custom chip strategy is reaching maturity. The TPU journey represents one of the longest-running custom silicon projects in AI, with internal development at Google dating back to 2015. The current performance leap is substantial: Ironwood’s 9216-chip configuration delivers 42.5 exaflops of computational power, theoretically surpassing the world’s largest supercomputers in AI-specific tasks. The future will also depend on what other AI accelerator chip makers around the world achieve next. Competing against Nvidia, Cerebras, Qualcomm, AMD, Graphcore, and TSMC will be a tough nut to crack for Google. Still, as the world welcomes Google’s latest Ironwood TPU with open arms, the future looks Google-powered!

Google’s AI Hypercomputer is a supercomputing system optimized to support artificial intelligence and machine learning workloads. It uses best practices and systems-level designs to boost efficiency and productivity across AI pre-training, tuning, and serving.

High-Stakes in Certified AI Prompt Engineers and ML Engineers

You may be wondering how the workforce needs to evolve with this staggering leap in AI chip technology. The answer is quite simple. Earning current, well-regarded machine learning certifications can propel AI Prompt Engineers forward. With the right skills and strategies, these AI professionals will be well placed as the world opens up greater AI and ML Engineer career opportunities. This is why a legitimate, globally accepted AI certification matters: it can support a smooth career with many opportunities opening your way. Keep scaling up and stay relevant with the latest AI tech and skill sets through these credentials, and power the AI industry like never before!
