Soon after its launch, the R1 AI assistant model from Chinese startup DeepSeek disrupted the global AI market and rattled stock markets around the world. Within 7 days of its launch, the chip giant NVIDIA lost nearly $600 billion in market capitalization.

Not just that, it has already displaced leaders like OpenAI’s ChatGPT and Google’s Gemini from the top position on the App Store as the most popular AI chat tool. This growing popularity has drawn the attention of a wide range of consumers as well as cyber attackers.

However, this rapidly growing large language model (LLM) has also exposed the vulnerabilities that advanced AI models can have. Within days of its launch, DeepSeek suffered a massive cyberattack that forced the Chinese AI company to stop new user registrations.

This attack brings concerns about the security of AI platforms to the forefront and highlights how vulnerable new AI systems can be to malicious actors, no matter how advanced, fast, and cost-effective these tools are.

Let’s dig deeper into this case and understand what the DeepSeek cyberattack is and why it is an alarm bell for AI developers, cybersecurity experts, and every individual AI user.

DeepSeek AI Attack

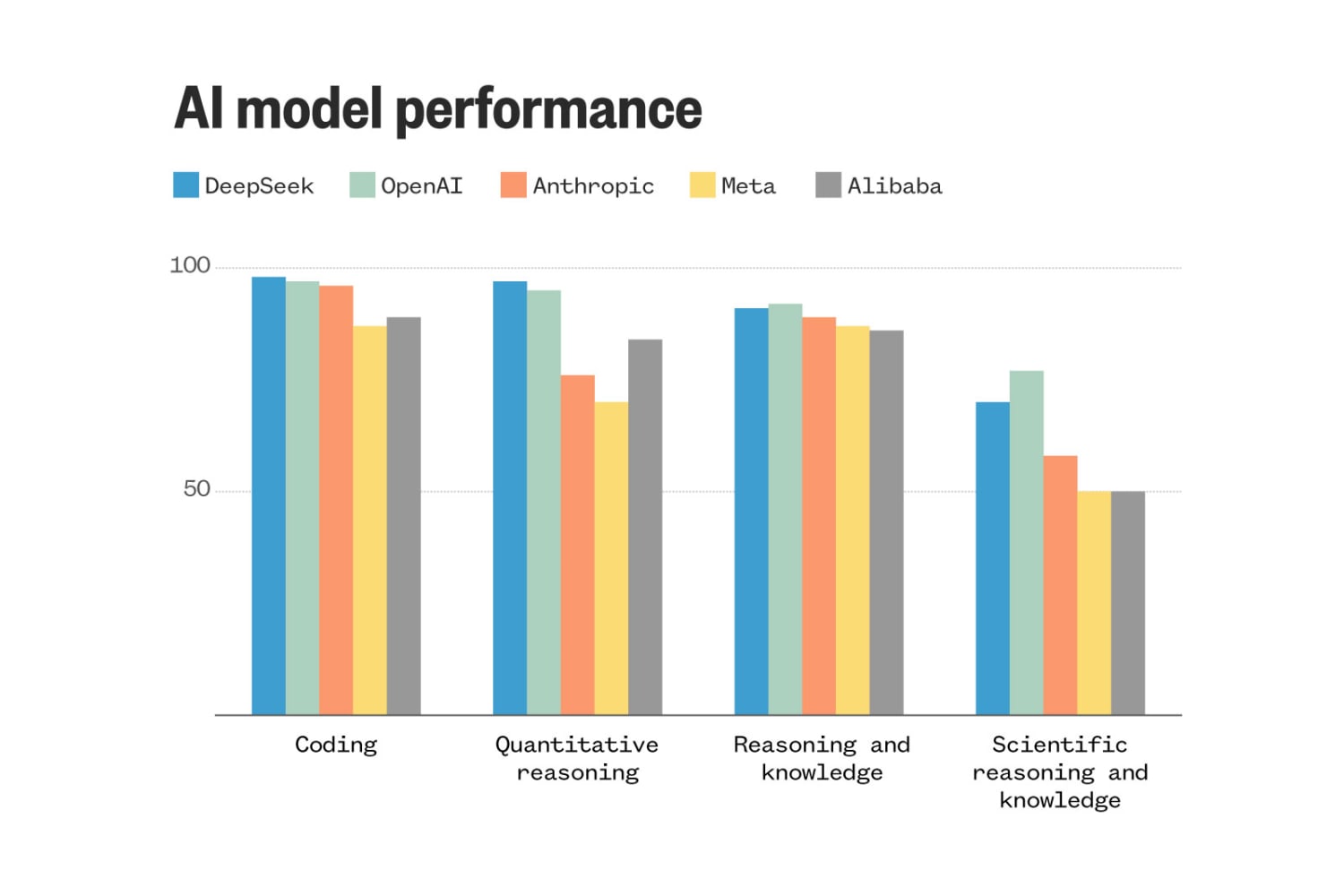

DeepSeek R1 gained its popularity as a cost-efficient large language model rivaling those of US giants like OpenAI and Google. It claimed top-tier performance with minimal computational resources, having been built with fewer and less advanced AI chips than its competitors employ. DeepSeek is a Chinese AI company based in Hangzhou and founded by Liang Wenfeng in 2023.

Source: NBC News

Recently, it faced a series of sophisticated cyberattacks that began in early January 2025 and then escalated in both scale and complexity, posing a serious challenge to DeepSeek’s operations and data security.

XLab, a Chinese cybersecurity company that was closely monitoring the attacks, stated that the attackers employed a variety of methods, including:

Since the attackers changed their methods over time, it became even more difficult for DeepSeek to defend against them promptly. This forced the AI company to temporarily restrict registrations on its platform to keep its service running smoothly.

Vulnerabilities in DeepSeek Exposed

Cybersecurity experts and researchers were able to identify several vulnerabilities in the DeepSeek model. KELA, a cybersecurity firm, was able to jailbreak the platform and make it generate malicious outputs, such as ransomware code, instructions for creating toxins, and even fabricated sensitive content.

DeepSeek is not alone. Market leaders like ChatGPT are also targeted by criminals because of their widespread adoption and access to huge amounts of data.

So, what does this mean?

It is a wake-up call for all developers, users, and cybersecurity experts: as AI platforms evolve, cyber threats evolve with them. All of these stakeholders must therefore be aware of the following cybersecurity concerns.

Raising Security Concerns for AI Platforms

The attack on DeepSeek AI has raised several security concerns for AI platforms, and users must be aware that:

Many AI platforms collect personal user information such as names, email addresses, and bank account details, and a security breach could expose this sensitive information, compromising user privacy.

Research has shown that AI models can be manipulated or jailbroken with little effort and misdirected into generating harmful outputs. Malicious actors can exploit this vulnerability to commit cybercrimes.

AI platforms are becoming critical infrastructure, and cyberattacks on them can disrupt essential services. For example, an attack on an AI-powered healthcare system could put patients’ lives at risk.

Cybercriminals can use AI platforms to create highly convincing phishing campaigns and social engineering attacks that are effective at deceiving and manipulating victims.

Vulnerable APIs that enable AI integrations can also be exploited by hackers, who can gain unauthorized access to user data and platform functionality to carry out their attacks.

Vulnerable AI platforms can also be used to automate the generation of malicious software (malware), making it easier for cybercriminals to streamline the overall development process.

These threats and concerns demonstrate why AI in cybersecurity is considered a double-edged sword.
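To make the API concern above more concrete, here is a minimal sketch of one common safeguard: signing each API request with an HMAC and rejecting stale or tampered requests. It is only an illustration, not a description of how DeepSeek or any other platform secures its APIs. The names `SECRET_KEY`, `MAX_AGE_SECONDS`, `sign_request`, and `verify_request` are hypothetical, and a real deployment would load the key from a secrets manager and combine this check with rate limiting and proper authentication.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical shared secret, for illustration only.
SECRET_KEY = b"example-secret-not-for-production"
# Assumed freshness window used to limit replayed requests.
MAX_AGE_SECONDS = 300


def sign_request(body: bytes, timestamp: int, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 signature over the timestamp and body."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_request(body: bytes, timestamp: int, signature: str,
                   now: Optional[float] = None,
                   key: bytes = SECRET_KEY) -> bool:
    """Accept a request only if it is fresh and its signature matches."""
    current = time.time() if now is None else now
    if abs(current - timestamp) > MAX_AGE_SECONDS:
        return False  # outside the freshness window: possible replay
    expected = sign_request(body, timestamp, key)
    # compare_digest runs in constant time, so it does not leak
    # how many leading characters of the signature were correct.
    return hmac.compare_digest(expected.encode(), signature.encode())
```

A client would call `sign_request` on the request body and send the timestamp and signature alongside it. The server then calls `verify_request` and drops anything that fails, so a modified body or a replayed old request is rejected before it reaches user data.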

How to Protect Users and AI Platforms from Cyberattacks?

It is the responsibility of developers and cybersecurity experts to secure AI platforms against compromise and malicious applications. However, users must also do their part to stay as safe as possible. Here are a few tips to keep in mind:

Organizations must also ensure:

The DeepSeek cyberattack, which exposed vulnerabilities in AI platforms, is a wake-up call for everyone.

This is a reminder that no matter how advanced and secure an AI platform is, it can be vulnerable to malicious actors. Therefore, AI companies need to take the security of such models seriously and invest in robust security measures to protect their platforms from various kinds of attacks.

At the same time, users must also be aware of the cyber threats and risks associated with AI models and follow best practices to keep themselves and their data secure.

Our collective efforts will ensure AI remains a beneficial technology for all.
