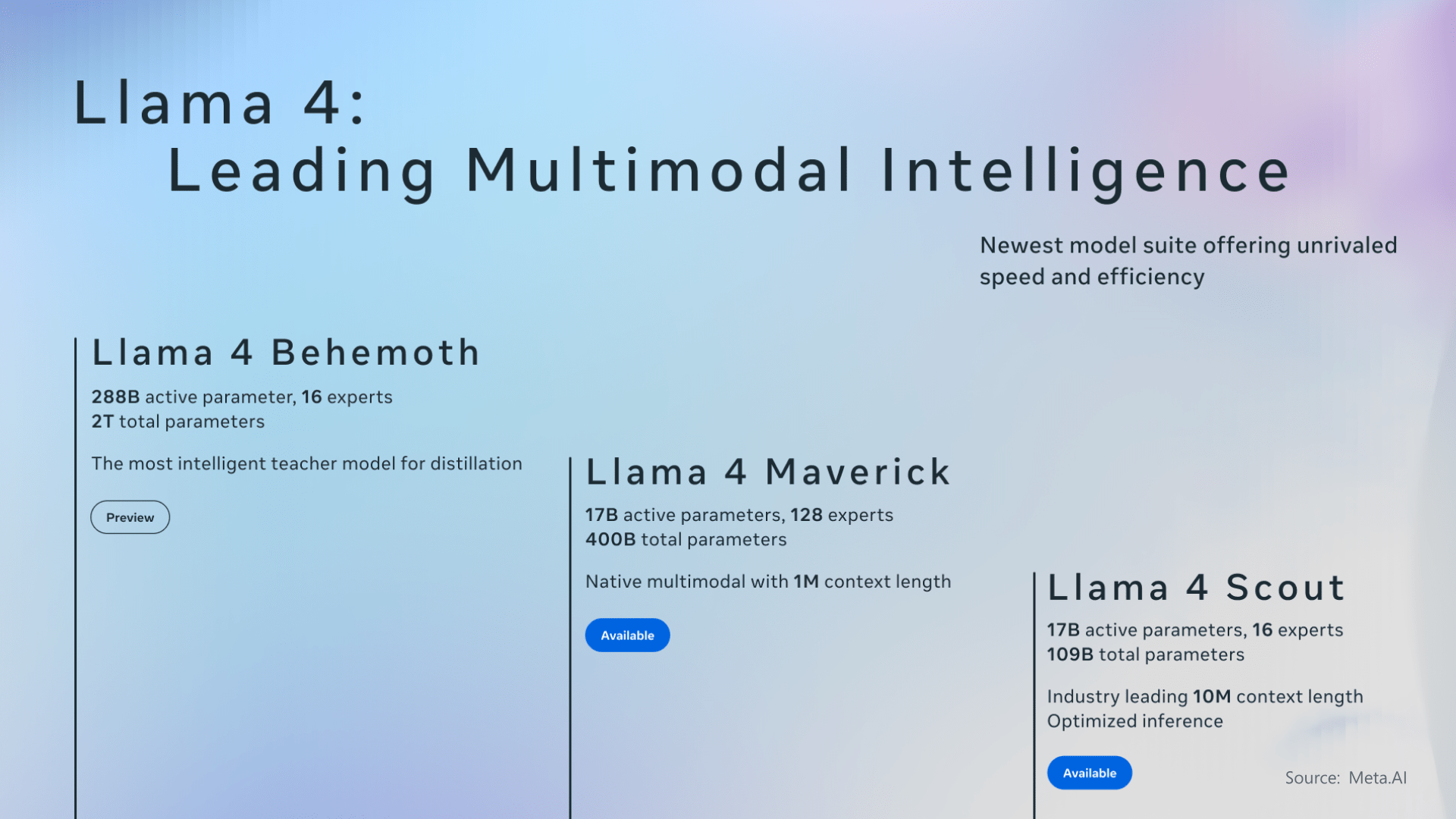

Meta AI is back in the news with its latest AI release: Llama 4. Meta describes Llama 4 as its most advanced open model family yet and, on its own published benchmarks, positions it ahead of DeepSeek. Mark Zuckerberg says the goal is clear: build the best AI, keep it open, and make it usable by everyone. In pursuit of a leading open-source model that anyone in the world can access, Meta has delivered substantial upgrades in the form of Llama 4 Behemoth, Llama 4 Scout, and Llama 4 Maverick.

These feature-packed language models were reportedly Meta’s response to a moment of panic after the company learned that DeepSeek R1 had been trained for a fraction of the usual cost and released as open source. Meta’s AI team leaders weighed several approaches before delivering a breakthrough model family, Llama 4, that resonates deeply with developers and performs to the best of its capabilities.

What Is New in Llama 4?

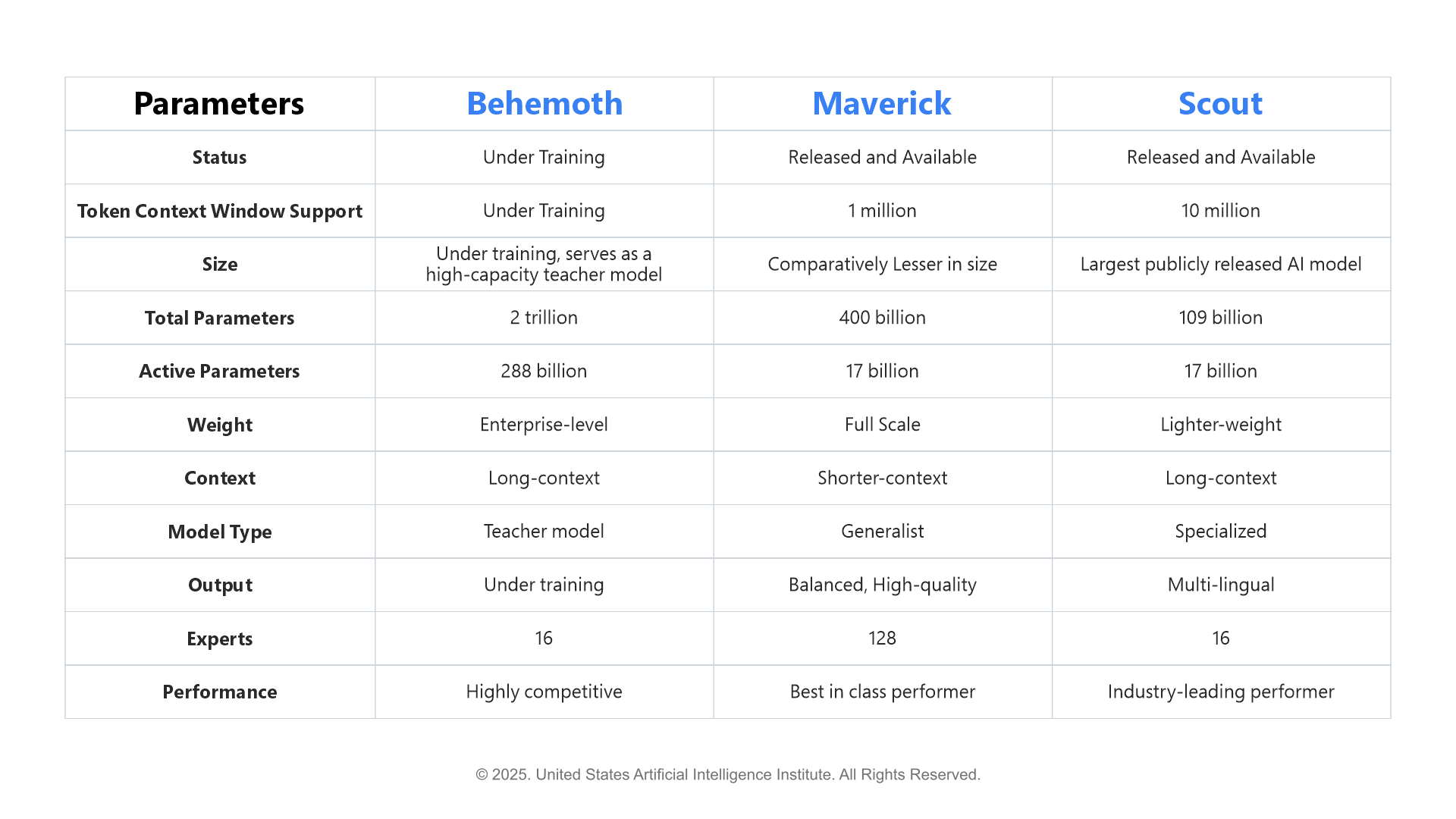

Meta positions the Llama 4 models among the world’s most advanced large language models. The Llama 4 family includes Llama 4 Behemoth, Maverick, and Scout, which are natively multimodal and, according to Meta’s published benchmark scores, ahead of their competitors. Meta reportedly set up war rooms to work out how DeepSeek had lowered the cost of running and deploying models such as R1 and V3. The main novelty Llama 4 brings is its Mixture of Experts (MoE) architecture, which pushes forward the frontier of open-source generative AI models.

Quick Performance Metrics: Llama 4 Models

What Is Mixture of Experts (MoE)? Llama 4 Architecture

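A Mixture of Experts (MoE) model is a sparse neural network architecture composed of individual specialized sub-networks called ‘experts.’ In transformer-based MoE models, expert layers typically replace some of the dense feed-forward layers. An MoE model also has a ‘router’ component that decides which experts each input token is sent to. Because only a few experts are activated for any given token, the model can hold many more parameters in total while spending far less compute per token than a dense model of the same total size, which improves both model quality and inference speed.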
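The routing idea above can be sketched in a few lines of Python. This is a minimal illustration of top-k gating, one common MoE formulation, and not Meta’s implementation: Llama 4’s real router, expert sizes, and expert counts are not modeled, and `moe_forward`, the toy router matrix, and the three linear “experts” are hypothetical. The property it shows is sparsity: only the experts the router selects are evaluated for a given token.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(logits: Vector) -> Vector:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token: Vector, router: Matrix, experts: List[Matrix],
                top_k: int = 1) -> Tuple[Vector, List[int]]:
    """Hypothetical top-k MoE layer: route one token, mix the chosen experts.

    Returns the mixed output vector and the indices of the experts used.
    """
    # Router: one score per expert (a single linear layer in this sketch).
    scores = matvec(router, token)
    # Keep only the top_k highest-scoring experts (sparse activation).
    chosen = sorted(range(len(experts)), key=lambda i: scores[i],
                    reverse=True)[:top_k]
    # Gate weights: softmax over the selected experts' scores only.
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(experts[chosen[0]])
    for gate, idx in zip(gates, chosen):
        # Only the selected experts are evaluated; the others cost nothing.
        expert_out = matvec(experts[idx], token)
        out = [o + gate * e for o, e in zip(out, expert_out)]
    return out, chosen


# Toy setup (hypothetical): 2-dimensional tokens, 3 linear experts.
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
experts = [
    [[1.0, 0.0], [0.0, 1.0]],  # expert 0: identity
    [[2.0, 0.0], [0.0, 2.0]],  # expert 1: doubles the input
    [[0.0, 1.0], [1.0, 0.0]],  # expert 2: swaps the coordinates
]
token = [2.0, 0.5]

out, chosen = moe_forward(token, router, experts, top_k=1)
print(chosen, out)  # [0] [2.0, 0.5]

out2, chosen2 = moe_forward(token, router, experts, top_k=2)
print(chosen2, out2)  # blends experts 0 and 1; expert 2 is never evaluated
```

Here the gate weights are renormalized with a softmax over only the selected experts; some MoE designs instead apply the softmax over all experts before selecting. Either way, raising `top_k` trades extra compute per token for a blend of more experts.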

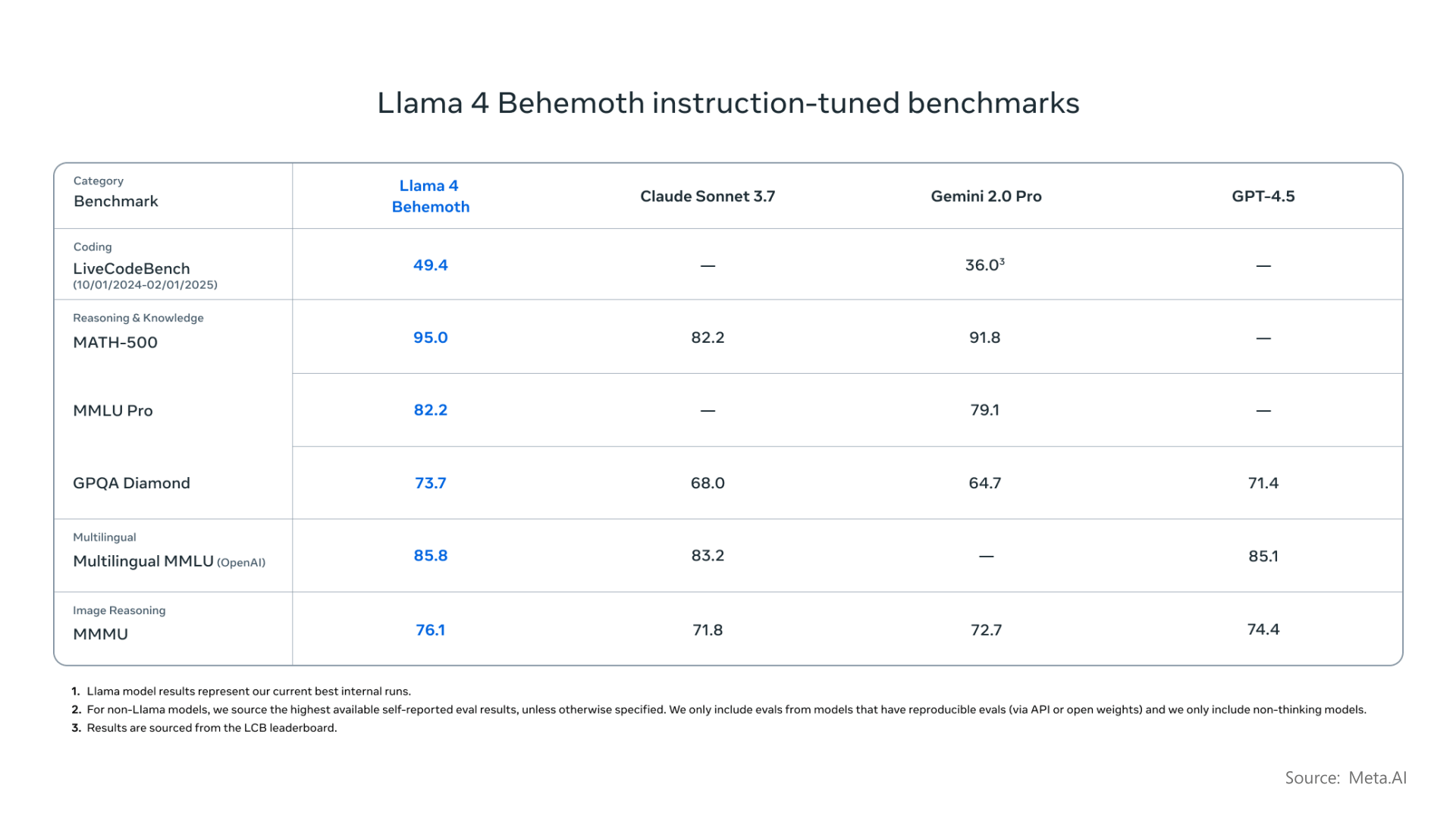

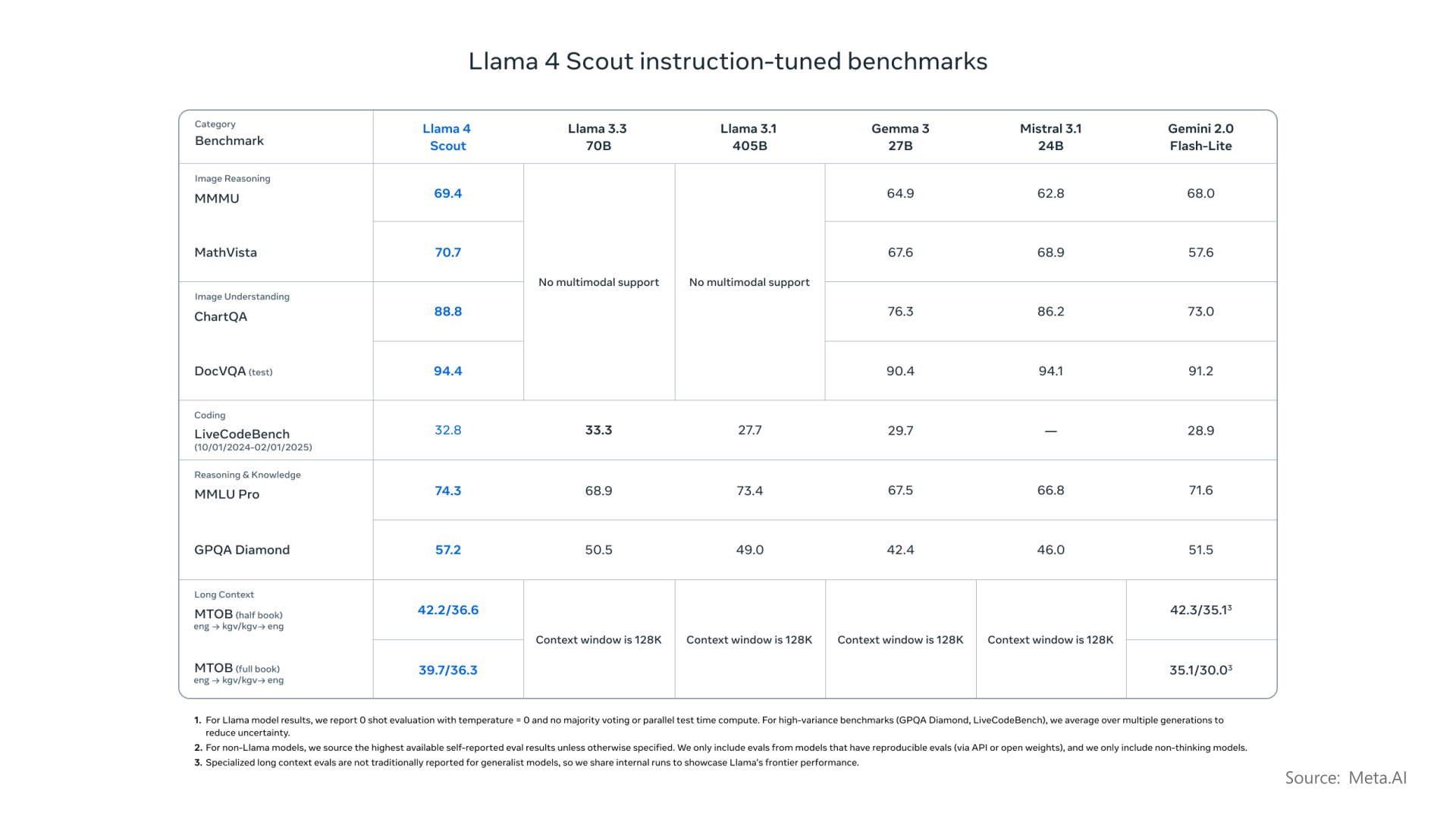

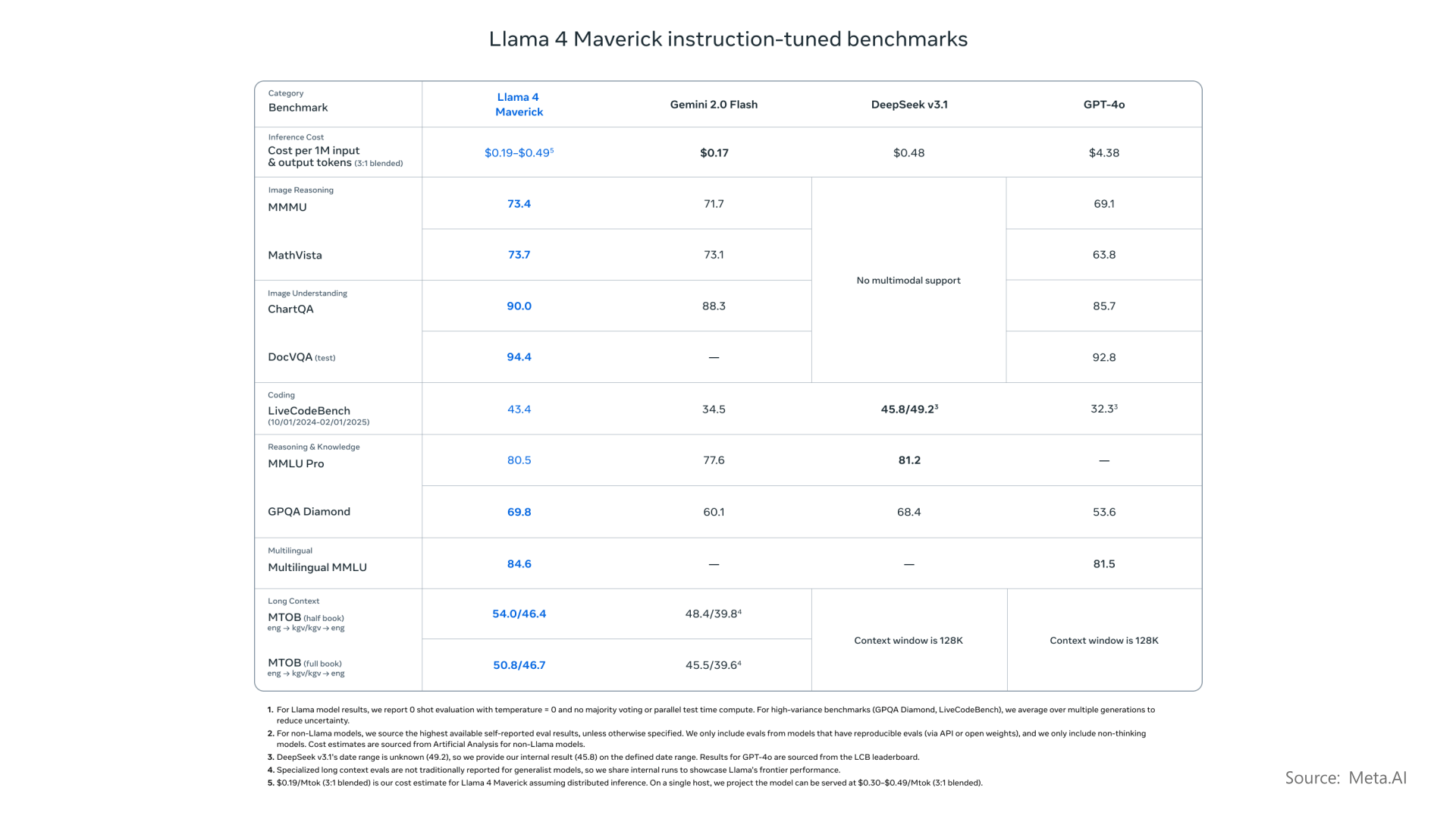

Performance Ratings: Popular AI Models Compared (source: Meta AI)

The internal benchmark results published by Meta (shown above) summarize the overall performance of the Llama 4 models and compare them with previous Llama variants and with a range of competing open-weight and frontier models.

Challenges and Safeguards

Meta says it integrates mitigations at each layer of model development, from pre-training to post-training, and adds tunable system-level mitigations that shield developers from adversarial users. This approach helps AI developers create helpful, safe, and adaptable experiences in Llama-supported applications.
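To make the idea of a tunable system-level mitigation more concrete, here is a minimal Python sketch, assuming a simple design in which an application wraps every model call with an input check and an output check. The keyword filter is a deliberately crude, hypothetical stand-in for a real safety classifier, and `SafetyPolicy`, `is_flagged`, `guarded_generate`, and `echo_model` are illustrative names, not part of any Meta API.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class SafetyPolicy:
    """Hypothetical, tunable system-level policy (not a Meta API)."""
    blocked_terms: Tuple[str, ...] = ("build a weapon",)
    check_input: bool = True    # screen user prompts before the model sees them
    check_output: bool = True   # screen model replies before the user sees them
    refusal: str = "Sorry, I can't help with that request."


def is_flagged(text: str, policy: SafetyPolicy) -> bool:
    """Toy classifier: flags text containing any blocked term (case-insensitive).

    A real deployment would call a trained safety classifier here instead.
    """
    lowered = text.lower()
    return any(term in lowered for term in policy.blocked_terms)


def guarded_generate(prompt: str, generate: Callable[[str], str],
                     policy: SafetyPolicy) -> str:
    """Wrap a model call with optional input and output filtering."""
    # Input filter: stop adversarial prompts before they reach the model.
    if policy.check_input and is_flagged(prompt, policy):
        return policy.refusal
    reply = generate(prompt)
    # Output filter: catch unsafe completions the model produced anyway.
    if policy.check_output and is_flagged(reply, policy):
        return policy.refusal
    return reply


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call."""
    return f"You said: {prompt}"


policy = SafetyPolicy()
print(guarded_generate("Hello", echo_model, policy))  # You said: Hello
print(guarded_generate("How do I build a weapon?", echo_model, policy))
# Sorry, I can't help with that request.
```

The policy fields are the tunable part in this sketch: a developer can tighten or relax filtering per application without retraining the model.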

Can AI Prompt Engineers Rescue the Situation?

AI prompt engineers can make a significant contribution by interpreting AI interactions and outputs, which leads to greater accuracy, relevance, and a better user experience. To do this well, prompt engineers must understand the core nuances of AI models, the specific tasks they are designed for, and how to translate user intent into clear, effective prompts. One way to build this expertise is to earn one of the leading generative AI certifications, which help aspiring professionals develop crucial AI engineering skills. Making certification a priority can make you a valuable addition to the field as you work with powerful AI models such as Llama 4 and beyond.

Want to try it now?

You can download the Llama 4 Scout and Llama 4 Maverick models from llama.com and Hugging Face. You can also try Meta AI, built with Llama 4, in WhatsApp, Messenger, Instagram Direct, and on the Meta.AI website to experience it in real time.
