In the era of the information revolution, generative AI (GenAI for short) and OpenAI, once practically its only parent, have become household names that rub shoulders with the big boys, with OpenAI even threatening industry titans like Google and Big Blue (IBM). Consider ChatGPT: it passed 1 million users within its first week of release and has since garnered over 180 million users worldwide. Comparing the business to a spaceship would not be out of place.

Then came GPT-4, which Sam Altman himself called “the dumbest model you’ll ever use.” Altman also stated that OpenAI was not training GPT-5 and would not be “for some time,” probably in response to the open letter, signed by 1,100 people including Elon Musk and Steve Wozniak, that called for a six-month pause on training AI systems more powerful than GPT-4. Sam Altman, however, was not deterred.

Regardless of the industry pressure, OpenAI kept pushing the boundaries.

And the result?

Welcome (soon), ChatGPT-5!

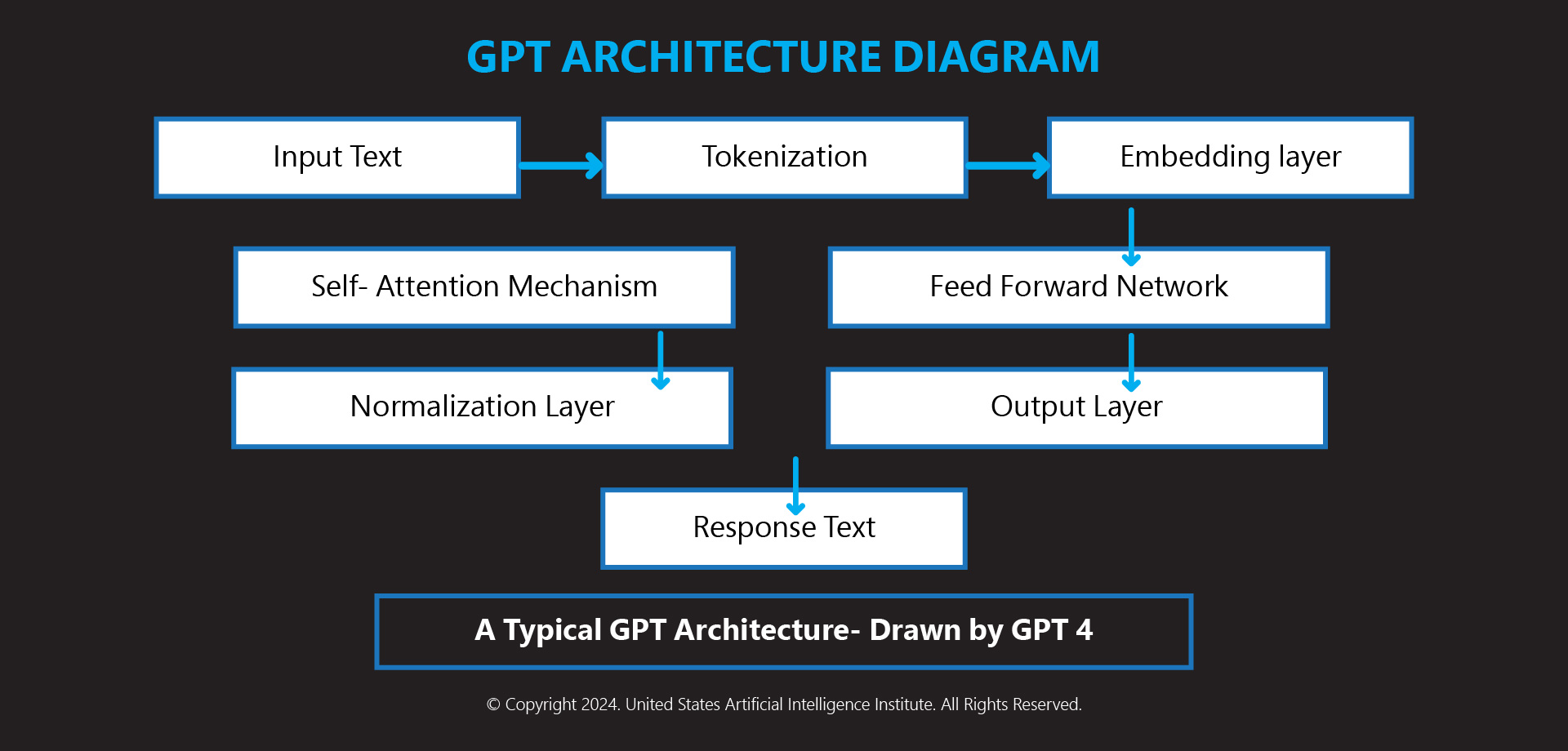

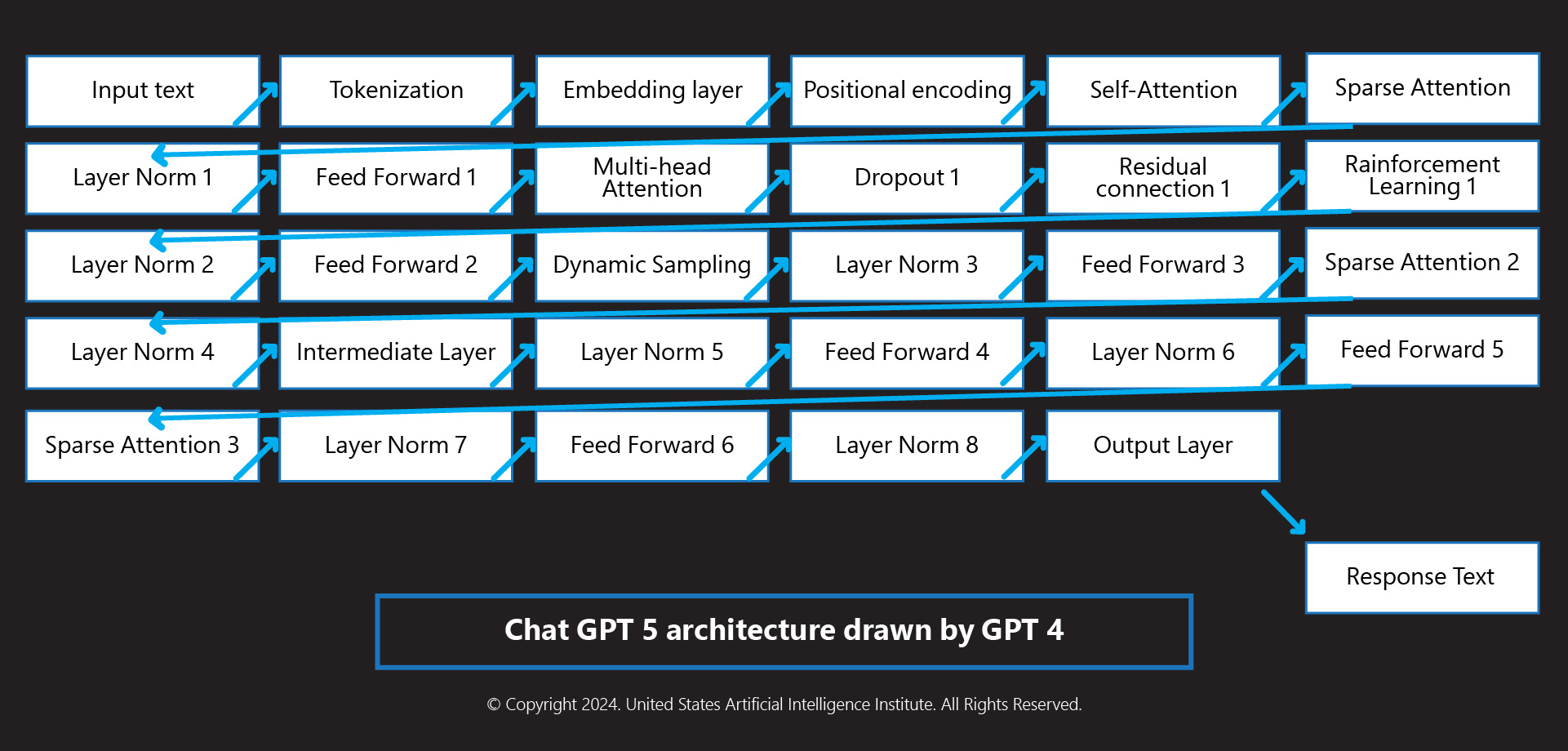

Understanding the fundamentals of how ChatGPT-5 is modeled means looking at a combination of sophisticated algorithms and cutting-edge neural architectures. At its core is the now ubiquitous transformer, an architecture that has redefined the state of the art in machine learning. It enables a system to parse and generate humanlike text that is both coherent and context-aware.

Why GPT-5 is critical to us, and to OpenAI

When GPT-4 was dismissed by OpenAI’s own CEO as the dumbest model users would ever have to put up with, OpenAI swallowed its pride and stayed quiet. Meanwhile, reviews like “The latest model is getting way lower quality and lazy. Doesn’t provide full answers. Doesn’t provide full code. Doesn’t even provide as high quality of a code. You have to really talk to it for an hour with so many questions to get a response out.” (Reddit user) flooded the internet.

While Altman is reportedly seeking a mind-bending $7 trillion in funding for semiconductors that can handle GPT workloads (and whatever generative AI comes next), OpenAI is getting a bad rap for its data-gathering methods and ethics. Put all these factors together and it is clear that OpenAI desperately needs a breakthrough right now. Sam Altman knows it, and so do Microsoft and Google, though they are still playing catch-up.

Under the hood of GPT-5 (Guesstimates and other stories)

So, what’s inside the shiny new lunchbox? Besides the data powering it, there is the transformer architecture, which reportedly lets the system parse and generate humanlike text with unprecedented coherence and contextual awareness. Its attention mechanism allows ChatGPT-5 to weigh the relevance of each word in a sentence relative to every other word, capturing nuanced meanings and subtleties in human language.
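OpenAI has not published GPT-5’s internals, but the idea of weighing the relevance of each word against every other word is what the transformer’s scaled dot-product self-attention does. Here is a minimal pure-Python sketch of that general technique, assuming toy 2-dimensional embeddings (the tokens and vectors are hypothetical) and skipping the learned query/key/value projections a real model would apply first:

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this token's query against every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output is the attention-weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs, all_weights

# Hypothetical 3-token "sentence" with made-up 2-D embeddings. A real model would
# project embeddings into separate Q, K, V spaces with learned weight matrices.
tokens = ["the", "bank", "river"]
emb = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
outputs, weights = self_attention(emb, emb, emb)
for tok, row in zip(tokens, weights):
    print(tok, [round(w, 3) for w in row])
```

Each printed row sums to 1 and shows how strongly that token “attends” to every token in the sentence, itself included.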

GPT-5 Architecture: The New (Not a) Kid on the Block

Alright, but what about training the model?

GPT-5 has reportedly been trained on colossal datasets whose sizes “have hitherto not seen before in any pre training or training,” according to an OpenAI employee. Pre-training involves ingesting massive datasets spanning diverse domains and genres, and it forms the foundation for subsequent fine-tuning. The pre-training process uses unsupervised and self-supervised learning techniques to learn language patterns, grammar, and context without human-labeled data.
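Self-supervised pre-training gets its “labels” from the text itself: the usual objective for GPT-style models is predicting the next token from the tokens before it. A minimal sketch of how raw text becomes training pairs, assuming whitespace splitting in place of a real subword tokenizer and a hypothetical `context_size` of 3:

```python
def next_token_pairs(text, context_size=3):
    """Turn raw text into (context, target) pairs with no human labels."""
    # Whitespace split stands in for a real subword tokenizer.
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        # context_size=3 is a hypothetical, tiny context window; real models use thousands.
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs

for context, target in next_token_pairs("the cat sat on the mat"):
    print(context, "->", target)
```

Every position in the text becomes a prediction task, which is why pre-training can scale to enormous unlabeled corpora.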

Fine-Tuning the Model

Fine-tuning is critical for any AI/ML model. For GPT-5 it builds on the data ingested during pre-training, and this is where GPT-5 is expected to outdo its older, “dumber” sibling by leaps and bounds. A critical phase, fine-tuning uses supervised learning with iterative testing on specific tasks. This refines the model’s ability to understand and respond to contextually rich queries with near-surgical precision.
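How OpenAI fine-tunes GPT-5 is not public. One common pattern in supervised fine-tuning of chat models is to train on prompt/response pairs while masking out the prompt, so the loss only rewards the model for producing the desired response. A minimal sketch, assuming made-up token IDs and per-token loss values (both hypothetical); the one-position shift between inputs and next-token targets is omitted for clarity:

```python
# -100 is the default ignore_index of PyTorch's CrossEntropyLoss; here it is used
# only as a conventional "skip this position" marker.
IGNORE_INDEX = -100

def build_sft_example(prompt_ids, response_ids):
    """Concatenate prompt and response; label prompt positions so they are ignored."""
    input_ids = prompt_ids + response_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels

def masked_mean_loss(token_losses, labels):
    """Average per-token losses over positions that are not masked out."""
    kept = [loss for loss, label in zip(token_losses, labels) if label != IGNORE_INDEX]
    return sum(kept) / len(kept)

# Hypothetical token IDs for a prompt and its reference answer.
prompt = [101, 2054, 2003]
response = [7592, 2088]
input_ids, labels = build_sft_example(prompt, response)
print(input_ids)  # [101, 2054, 2003, 7592, 2088]
print(labels)     # [-100, -100, -100, 7592, 2088]
# Hypothetical per-token losses; the three prompt positions are ignored.
print(masked_mean_loss([9.0, 9.0, 9.0, 1.0, 3.0], labels))  # 2.0
```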

Learning Shots

Few-shot and zero-shot learning are not new (GPT-3 was introduced as a few-shot learner), but they are currently being touted as among ChatGPT-5’s most distinguishing features. With few-shot learning, the model adapts to a task from just a handful of examples, showing off its flexibility and efficiency in picking up patterns. Zero-shot learning goes a step further: the model generates accurate responses with no examples at all, relying entirely on its pre-trained knowledge.
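In both cases the “learning” happens in the prompt, not in the model’s weights. A minimal sketch of how zero-shot and few-shot prompts differ, using a hypothetical `build_prompt` helper and a made-up sentiment task (this is generic prompt construction, not an OpenAI API):

```python
def build_prompt(task, query, examples=None):
    """Zero-shot if `examples` is empty/None; few-shot if it holds (input, output) pairs."""
    # Hypothetical helper; not part of any OpenAI library.
    parts = [task]
    for example_input, example_output in examples or []:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    # The final block leaves "Output:" open for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)

task = "Classify the sentiment of each review as positive or negative."
zero_shot = build_prompt(task, "Battery died after a day.")
few_shot = build_prompt(
    task,
    "Battery died after a day.",
    examples=[("Love the screen!", "positive"), ("Arrived broken.", "negative")],
)
print(zero_shot)
print("---")
print(few_shot)
```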

But Isn’t Security a Primary Concern in AI?

Cybersecurity has always been a bone of contention in the AI world. ChatGPT-5 is expected to mitigate this risk with several advanced security features.

What Else Is in the Box?

Reinforcement learning from human feedback (RLHF), already used to train ChatGPT, is expected to play an even larger role in GPT-5’s training. After drawing flak over censorship and ethics, OpenAI is expected to make ethical training, continuous improvement, and user security primary focus areas for this model, aiming for near-human-level judgment on ethics and safety.
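Reinforcement learning from human feedback typically starts by training a reward model on pairs of responses that human labelers ranked. A minimal sketch of the pairwise (Bradley-Terry style) loss described for OpenAI’s InstructGPT reward model; whether GPT-5 uses exactly this objective is an assumption, and the scores below are made up:

```python
import math

def pairwise_reward_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)): low when the preferred answer scores higher."""
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)); avoids overflow for large |margin|.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Hypothetical reward-model scores for two answers a human labeler compared.
print(round(pairwise_reward_loss(2.0, -1.0), 4))  # preferred answer ranked higher: small loss
print(round(pairwise_reward_loss(-1.0, 2.0), 4))  # ranked the wrong way: large loss
```

Minimizing this loss pushes the reward model to score human-preferred answers higher; that reward model then guides the reinforcement learning step.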

GPT-5 is also expected to require user authentication for access. Other security components include:

Rate Limiting – preventing the misuse or overuse of the model

Data Anonymization – GDPR should be on everyone’s mind by now where AI is concerned, and GPT-5 is expected to be GDPR and CCPA compliant
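OpenAI has not said how GPT-5’s rate limiting will work. A token bucket is one common way to implement it; here is a minimal sketch, where the class name, capacity, and refill rate are all hypothetical and the injectable clock exists only to make the demo deterministic:

```python
import time

class TokenBucket:
    """Hypothetical rate limiter: bursts up to `capacity`, refilled at `rate` tokens/second."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)  # Start full so an initial burst is allowed.
        self.last = clock()

    def allow(self, cost=1.0):
        """Spend `cost` tokens if available; return False to reject the request."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

# Simulated clock so the example is deterministic.
fake_now = [0.0]
bucket = TokenBucket(capacity=2, rate=1.0, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(3)])  # [True, True, False]
fake_now[0] = 1.0  # one second later, one token has been refilled
print([bucket.allow() for _ in range(2)])  # [True, False]
```

The bucket tolerates short bursts while capping sustained throughput, which is why the pattern suits APIs that bill and throttle per request or per token.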

GPT-5 is also expected to feature other cutting-edge security measures, including robust monitoring and logging, anomaly detection, input sanitization, secure containerized deployment, regular patching, and adversarial robustness, with the ability to counter adversarial attacks in real time.
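Anomaly detection can be as sophisticated as a learned model, but the idea is easiest to see in its simplest statistical form. A minimal z-score sketch, assuming a hypothetical per-key request log and a threshold of 3 standard deviations (neither comes from OpenAI):

```python
import statistics

def is_anomalous(history, value, threshold=3.0):
    """Flag `value` if it lies more than `threshold` standard deviations from the history's mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs >= 2 points
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > threshold

# Hypothetical requests-per-minute for one API key.
baseline = [100, 98, 103, 101, 97, 102, 99, 100]
print(is_anomalous(baseline, 104))  # False: 2 standard deviations above the mean
print(is_anomalous(baseline, 500))  # True: far outside normal traffic
```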

Rounding Up GPT-5

GPT-5 is expected to be released in late 2024 or early 2025. Pricing is expected to be in line with current models, sold in per-token lots as with Ada, Babbage, Curie, and Davinci, though OpenAI has not yet released API pricing. So, on to the much-promised conclusion:

GPT-5

GPT-5 promises to embody the most sophisticated models and neural architectures yet. Expected early next year with the same pricing model as GPT-4, it aims to represent the zenith of neural network architecture, data processing, and learning techniques. It is a testament to human ingenuity and is expected to be a monumental leap in design, functionality, and transformative potential.

With the advent of GPT-5, getting professionally certified in AI and committing to continuous learning is a no-brainer. In the labyrinth of modern technology, growth and success call for professional generative AI certification programs, which will be pivotal in helping people harness new, continuously evolving technologies. Let’s await the release of GPT-5 and see how far OpenAI has evolved.
