There is more to Retrieval Augmented Generation than meets the eye! Natural language processing (NLP) has advanced significantly in recent years, driven by transformer-based architectures and increasingly sophisticated language models. One of the most exciting developments in this space is Retrieval Augmented Generation (RAG), an approach that combines the strengths of knowledge retrieval and text generation to produce more accurate, informative, and engaging text.

How Did RAG Start?

The term was coined by Patrick Lewis, lead author of the celebrated 2020 research paper “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks.” RAG is a technique for enhancing the accuracy and reliability of generative AI models with information fetched from specific, relevant data sources. It fills a gap in how large language models (LLMs) work. LLMs are neural networks, typically measured by how many parameters they contain, and an LLM’s parameters essentially represent the general patterns of how humans use words to form sentences. That general knowledge lets an LLM respond fluently to general prompts, but it falls short when a user needs current or domain-specific facts, which is exactly what retrieval supplies.

Critical Limitations of Traditional Large Language Models

Traditional language models, such as those based on recurrent neural networks (RNNs) or transformers, rely on statistical patterns and associations learned from large datasets to generate text. While these models have achieved remarkable success in various NLP tasks, they suffer from several limitations:

The Emergence of Retrieval Augmented Generation

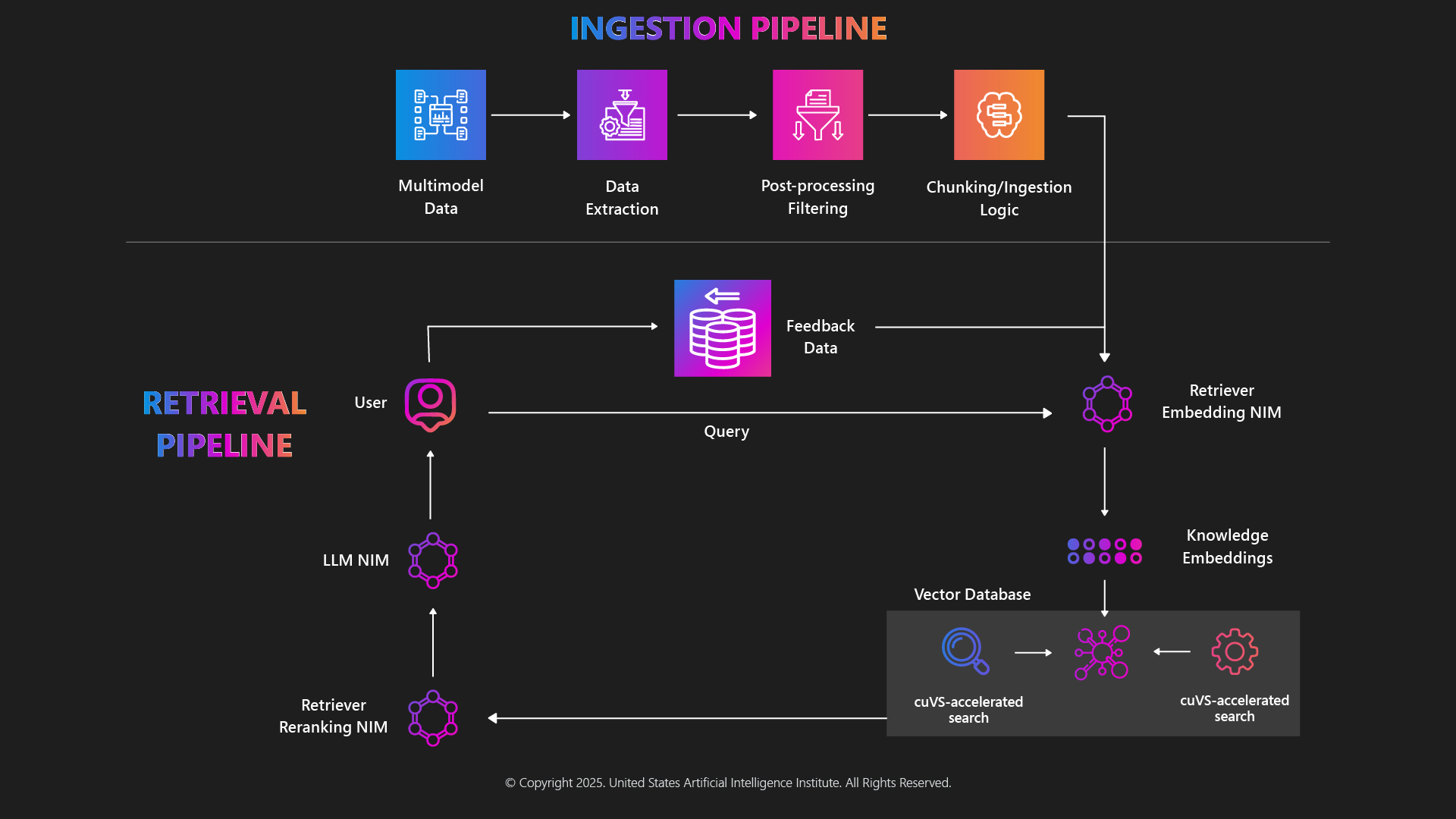

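Retrieval Augmented Generation (RAG) addresses the limitations of traditional language models by incorporating knowledge retrieval into the text generation process. RAG models consist of two primary components: a retriever, which searches an external knowledge source for passages relevant to the input, and a generator, a language model that conditions on the input together with those passages to produce the output.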
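To make the retrieval side more concrete, here is a minimal sketch of a retriever. The original RAG paper pairs a dense neural retriever with a pretrained sequence-to-sequence generator; this sketch swaps the dense embeddings for plain term-count vectors compared by cosine similarity, so it runs on the Python standard library alone. The function names (`tokenize`, `cosine`, `retrieve`) and the three-sentence corpus are hypothetical, chosen only for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k passages whose term vectors are most similar to the query's."""
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda doc: cosine(q, Counter(tokenize(doc))), reverse=True)
    return ranked[:k]


# Hypothetical toy knowledge source.
corpus = [
    "RAG was introduced by Patrick Lewis and colleagues in 2020.",
    "Transformers use self-attention to process sequences.",
    "The Eiffel Tower is located in Paris.",
]

print(retrieve("Who introduced RAG?", corpus, k=1))
# → ['RAG was introduced by Patrick Lewis and colleagues in 2020.']
```

A production retriever would typically use learned embeddings and an approximate nearest-neighbor index instead, but the contract stays the same: text in, the k most relevant passages out.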

How Does RAG Improve an AI Model’s Contextual Understanding?

With RAG, an LLM can go beyond its training data and retrieve information from a variety of data sources, including customized ones. By combining advanced information retrieval with natural language generation, RAG can significantly improve the accuracy, reliability, and contextual understanding of AI outputs, helping to overcome critical limitations of large language models (LLMs). Let us take a closer look.

Chatbots and virtual assistants, question-answering systems, and content summarization are some of the scenarios where RAG substantially improves contextual understanding.
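Once passages have been retrieved from a data source, customized or otherwise, they still have to reach the LLM. One common way to do this, assumed here rather than taken from this article, is to inject them into the prompt with source labels so the model can ground its answer and cite where each fact came from. The function `build_augmented_prompt`, the instruction wording, and the HR-policy passages below are all hypothetical examples.

```python
def build_augmented_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Combine retrieved (source_id, text) passages with the user's question.

    Numbering each passage and keeping its source label lets the model cite
    where its answer came from, which helps trace outputs back to the data.
    """
    context = "\n".join(
        f"[{i}] ({source}) {text}" for i, (source, text) in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "Cite passages by their number. If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical passages from a customized (internal) data source.
passages = [
    ("hr_policy.pdf", "Employees accrue 1.5 vacation days per month."),
    ("hr_faq.md", "Unused vacation days roll over for up to one year."),
]

print(build_augmented_prompt("How many vacation days do I earn each month?", passages))
```

Telling the model to answer only from the supplied context, and to admit when that context is insufficient, is one way to keep its output tied to the retrieved data rather than to whatever patterns it absorbed during training.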

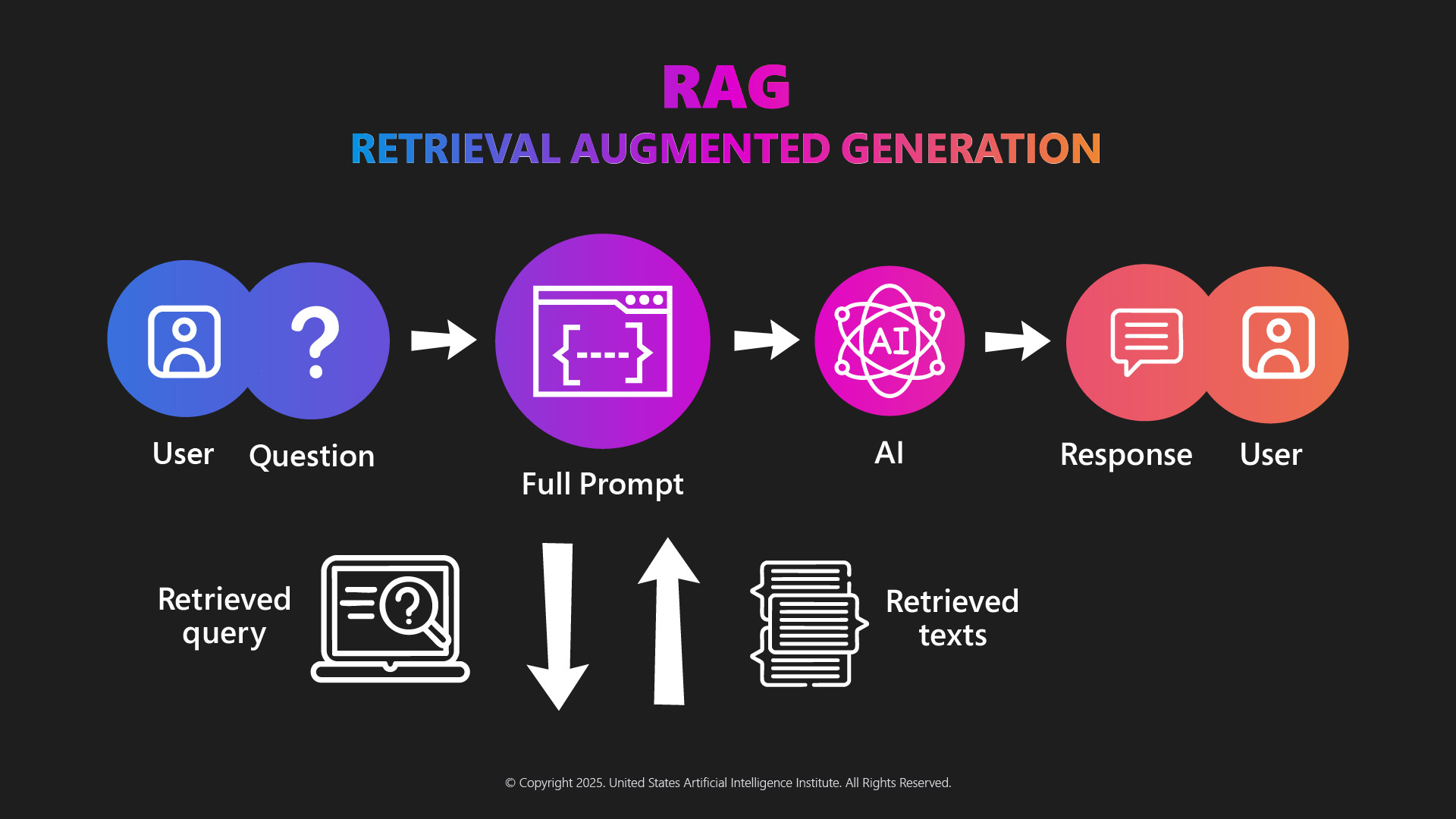

How Does RAG Work?

The RAG process involves the following steps:

Finally, the model generates a text response that fulfills the task.
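The retrieve, augment, and generate flow of a RAG system can be sketched end to end as follows. This is a minimal illustration under stated assumptions: `keyword_retrieve` is a toy keyword matcher over a hypothetical two-entry knowledge base, and `echo_generate` is a placeholder standing in for a real LLM call, so the pipeline runs without any external service.

```python
from typing import Callable


def rag_answer(
    question: str,
    retrieve: Callable[[str, int], list[str]],
    generate: Callable[[str], str],
    k: int = 3,
) -> str:
    # 1. Retrieval: fetch up to k passages judged relevant to the question.
    passages = retrieve(question, k)
    # 2. Augmentation: place the passages in the prompt ahead of the question.
    context = "\n".join(f"- {p}" for p in passages)
    prompt = f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    # 3. Generation: the language model responds, conditioned on the augmented prompt.
    return generate(prompt)


# Hypothetical knowledge base and stand-in components for illustration only.
knowledge_base = {
    "refund": "Refunds are processed within 5 business days.",
    "shipping": "Standard shipping takes 3 to 7 days.",
}


def keyword_retrieve(question: str, k: int) -> list[str]:
    """Toy retriever: return entries whose key appears as a word in the question."""
    words = set(question.lower().replace("?", "").split())
    return [text for key, text in knowledge_base.items() if key in words][:k]


def echo_generate(prompt: str) -> str:
    """Placeholder generator: a real system would call an LLM here.
    This stub simply returns the context block of the prompt."""
    return prompt.split("Question:")[0].strip()


print(rag_answer("How long does a refund take?", keyword_retrieve, echo_generate))
```

Because `rag_answer` takes the retriever and the generator as parameters, either component can be swapped (for example, for a vector-search retriever or a hosted model) without changing the pipeline itself.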

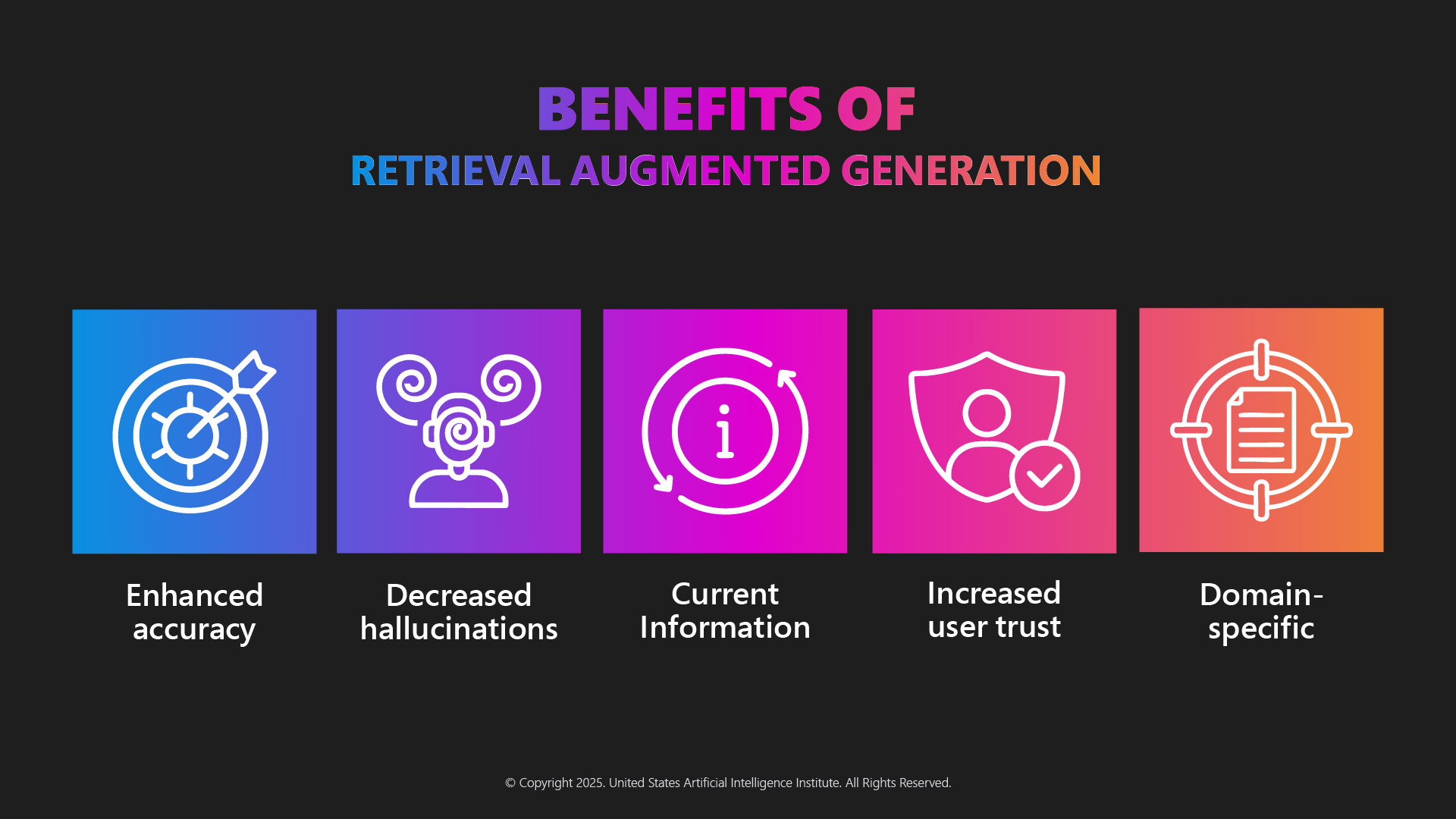

Benefits of RAG

RAG offers several benefits over traditional language models, including:

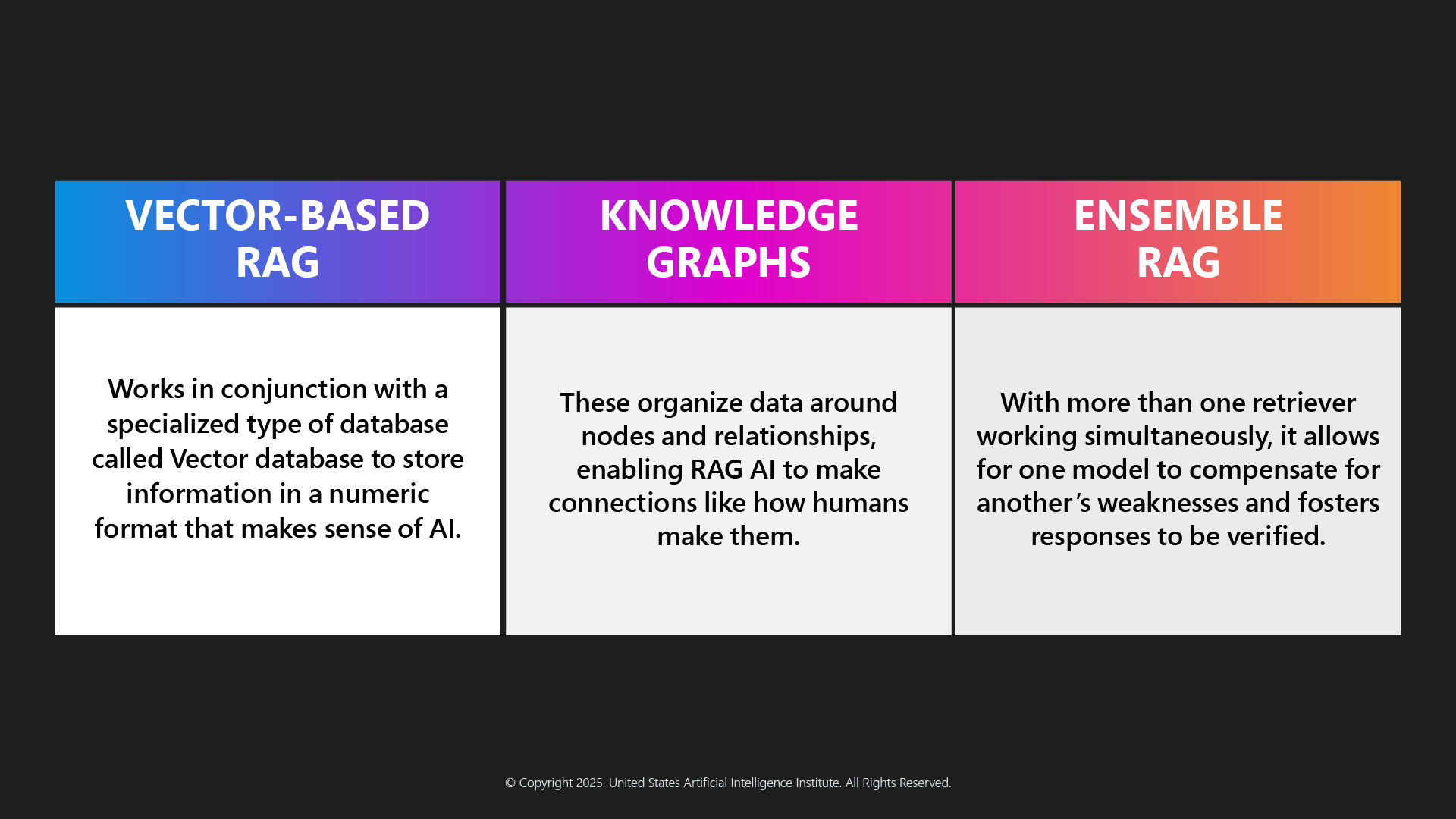

Types of RAG

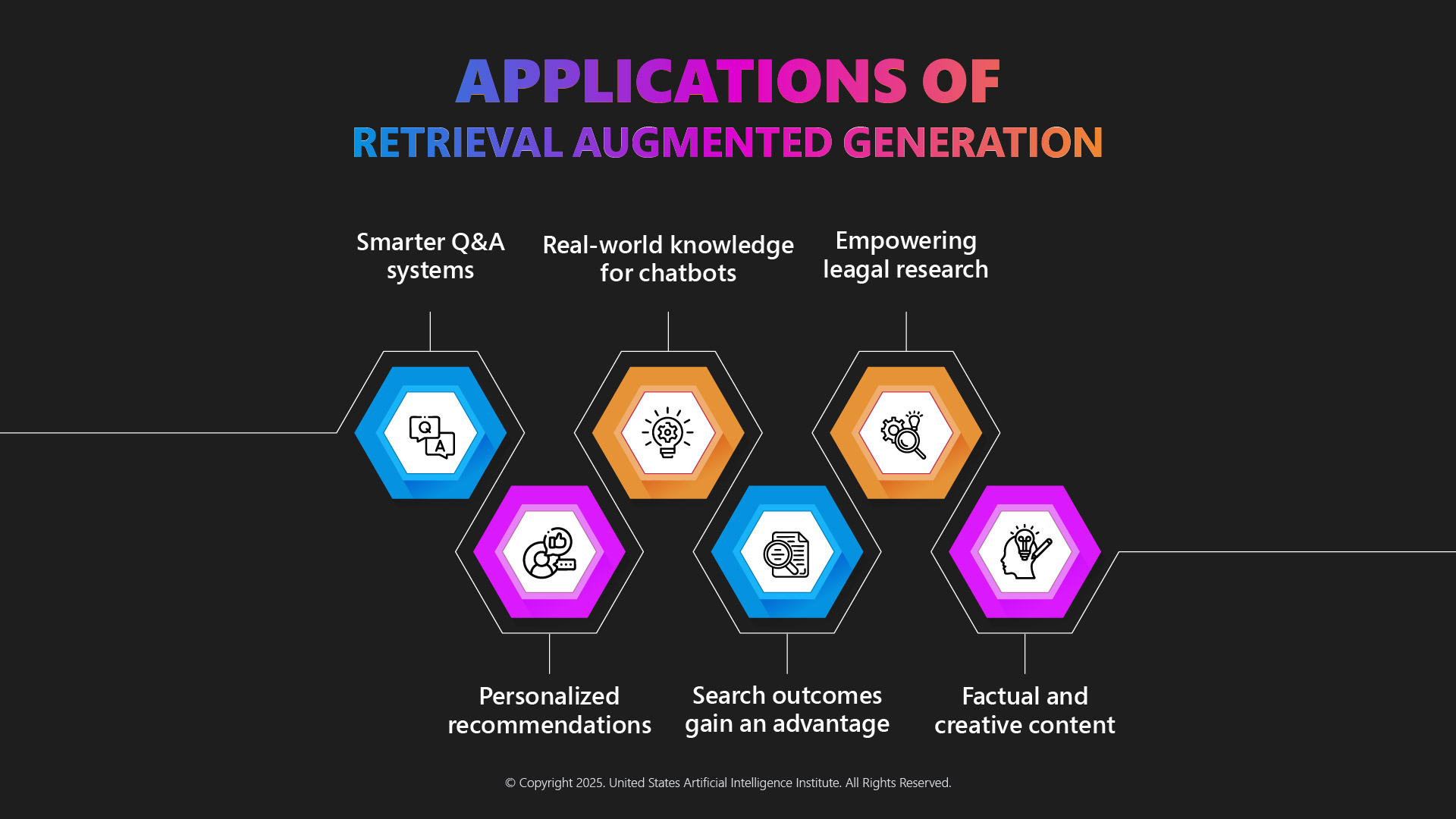

Applications of RAG

RAG has numerous applications in various industries, including:

In addition to the three above, notable applications of RAG include smarter Q&A systems, factual and creative content generation, chatbots that understand real-world context, improved search results, legal research, and personalized recommendations.

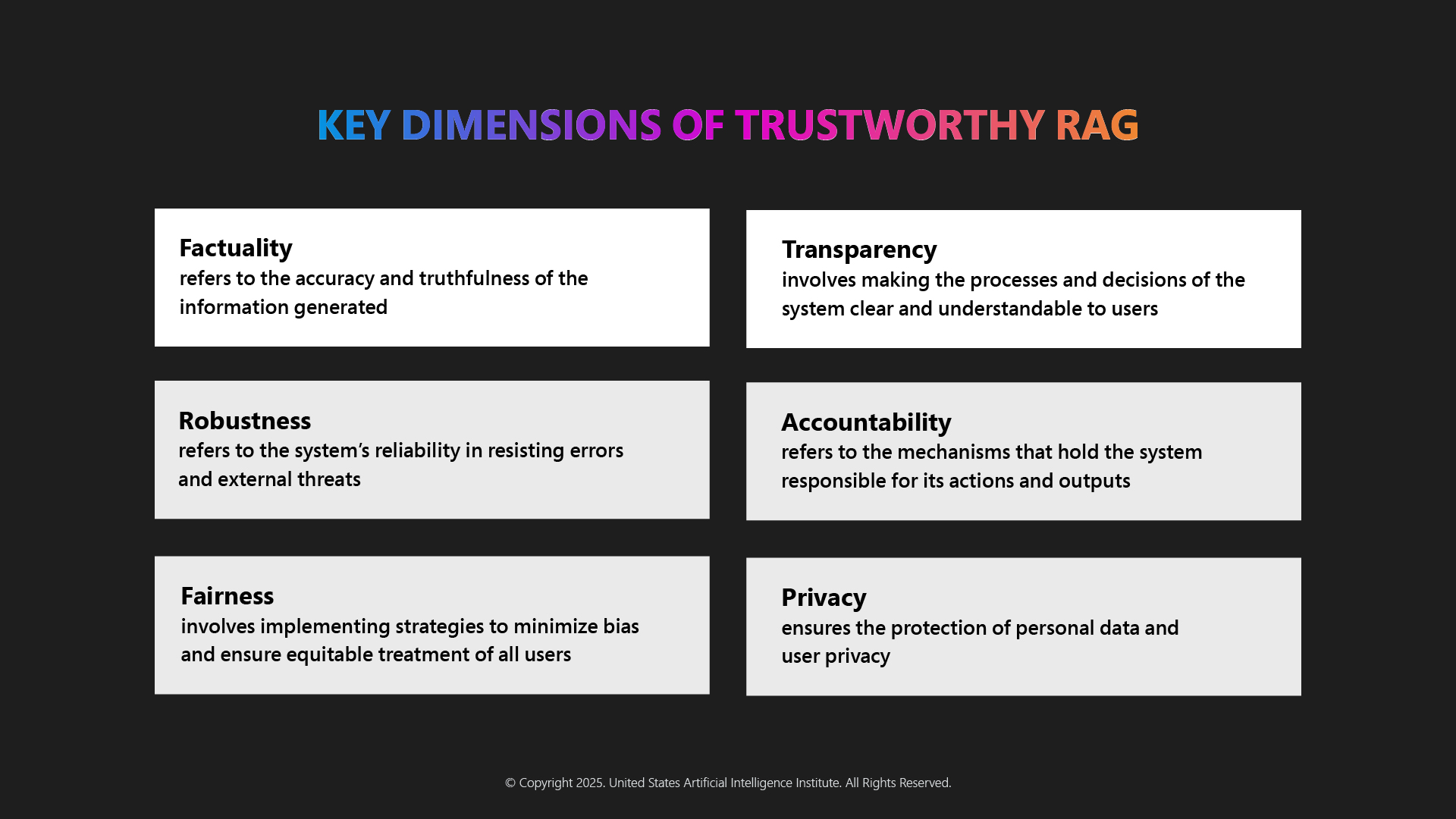

Key Dimensions of Trustworthy RAG

The trustworthiness of LLMs has become a critical concern as these systems are increasingly integrated into high-stakes applications such as financial systems. Techniques such as reinforcement learning from human feedback (RLHF), data filtering, and adversarial training have been employed to improve the trustworthiness of the LLMs used in RAG systems.

Challenges Impacting RAG

Left unaddressed, these challenges can bring Retrieval Augmented Generation (RAG) to a standstill. They severely reduce the effectiveness of the entire process and make it difficult to maintain performance over time.

How to Get Some Skin in the RAG Game in the Future?

Retrieval Augmented Generation (RAG) is a revolutionary approach that combines the strengths of knowledge retrieval and text generation to produce more accurate, informative, and engaging text. By drawing on knowledge from large external databases, AI engineers can use RAG models to improve accuracy, deepen contextual understanding, and reduce hallucinations. With its numerous applications across industries, RAG is poised to transform NLP and beyond. Are you ready to transform the AI world with the newest addition to your skill set? Some of the best AI engineer certifications can help you pave the way forward and realize your dream AI career with an in-depth understanding of RAG, LLMs, natural language processing, and, most importantly, generative AI. Apart from enhancing your competencies, these credentials can significantly boost your employability and earning potential. Yes, you read that right, so start now!
